StrainNet is a novel deep-learning approach for measuring strain from medical images. Understanding how materials and structures deform is essential for improving and optimizing engineering designs. In biology, accurately measuring tissue deformation in the human body is important for assessing tissue state in health and disease. However, acquiring accurate tissue deformation in vivo is difficult: traditional image-based strain analysis techniques, used with medical imaging systems such as MRI and ultrasound, are often limited by noise, out-of-plane motion, and image resolution. StrainNet is designed to overcome these limitations and provide a more complete understanding of tendon mechanics under various loads.

• Digital Image Correlation (DIC) and Direct Deformation Estimation (DDE) are traditional image-based strain analysis techniques for measuring deformation. However, they are often limited by noise, out-of-plane motion, and image resolution, particularly when applied to medical images in challenging in vivo settings.

• StrainNet is a novel deep-learning approach that utilizes a training set based on real-world clinical observations and image artifacts to overcome the limitations of traditional image-based strain analysis techniques.

StrainNet uses a two-stage architecture consisting of a DeformationClassifier and three separate networks (TensionNet, CompressionNet, and RigidNet) that predict the strain. The DeformationClassifier determines whether the deformation in the image is tension, compression, or rigid body motion, and the image is then passed to the corresponding network for strain prediction. The training set for StrainNet was developed to emulate real-world observations and challenges, and the model was trained and tested on both synthetic and real images of flexor tendons undergoing contraction in vivo.
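The routing step described above can be sketched in a few lines. This is a minimal illustration and not the released StrainNet code: the function `predict_strain`, the string labels, and the stand-in callables are all hypothetical, and the real classifier and strain networks are trained neural networks rather than the placeholder functions used here.

```python
from typing import Any, Callable, Dict, Tuple


def predict_strain(
    inputs: Any,
    classifier: Callable[[Any], str],
    regressors: Dict[str, Callable[[Any], Any]],
) -> Tuple[str, Any]:
    """Stage 1: classify the deformation type. Stage 2: run the matching network."""
    label = classifier(inputs)
    if label not in regressors:
        raise ValueError(f"no strain network registered for class {label!r}")
    return label, regressors[label](inputs)


# Placeholder stand-ins so the sketch runs without trained models (assumptions).
def toy_classifier(inputs: Any) -> str:
    # A trained DeformationClassifier would inspect the images here.
    return "tension"


toy_regressors = {
    "tension": lambda inputs: "TensionNet output",
    "compression": lambda inputs: "CompressionNet output",
    "rigid": lambda inputs: "RigidNet output",
}

label, strain = predict_strain("image placeholder", toy_classifier, toy_regressors)
print(label, "->", strain)
```

Keeping the dispatch separate from the networks means each strain network only has to handle one deformation type, which is the apparent motivation for the two-stage design.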

The results of our study demonstrate the effectiveness and potential of using deep learning for image-based strain analysis in challenging, in vivo settings.

On both synthetic test cases with known deformations and real, experimentally collected ultrasound images of flexor tendons undergoing contraction in vivo, StrainNet outperforms the traditional techniques DIC and DDE, with median strain errors 48-84% lower.
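To make the "percent lower" comparison concrete, the sketch below shows one common way such a figure can be computed: take the median absolute strain error of each method against known strains, then express the difference relative to the baseline. The helper names and all numbers are made up for illustration; they are not results from the study, and the exact error definition used in the paper may differ.

```python
from statistics import median


def median_abs_error(predicted, true):
    """Median of the absolute differences between predicted and true strains."""
    return median(abs(p - t) for p, t in zip(predicted, true))


def percent_reduction(baseline_error, new_error):
    """How much lower new_error is than baseline_error, in percent."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# Made-up strain values (percent strain), purely for illustration.
true_strain = [2.0, 4.0, 6.0, 8.0]
baseline_pred = [3.0, 6.0, 5.0, 12.0]  # hypothetical traditional-method output
improved_pred = [2.5, 4.5, 6.5, 9.0]   # hypothetical learned-method output

baseline_err = median_abs_error(baseline_pred, true_strain)
improved_err = median_abs_error(improved_pred, true_strain)
print(f"median error reduced by {percent_reduction(baseline_err, improved_err):.1f}%")
```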

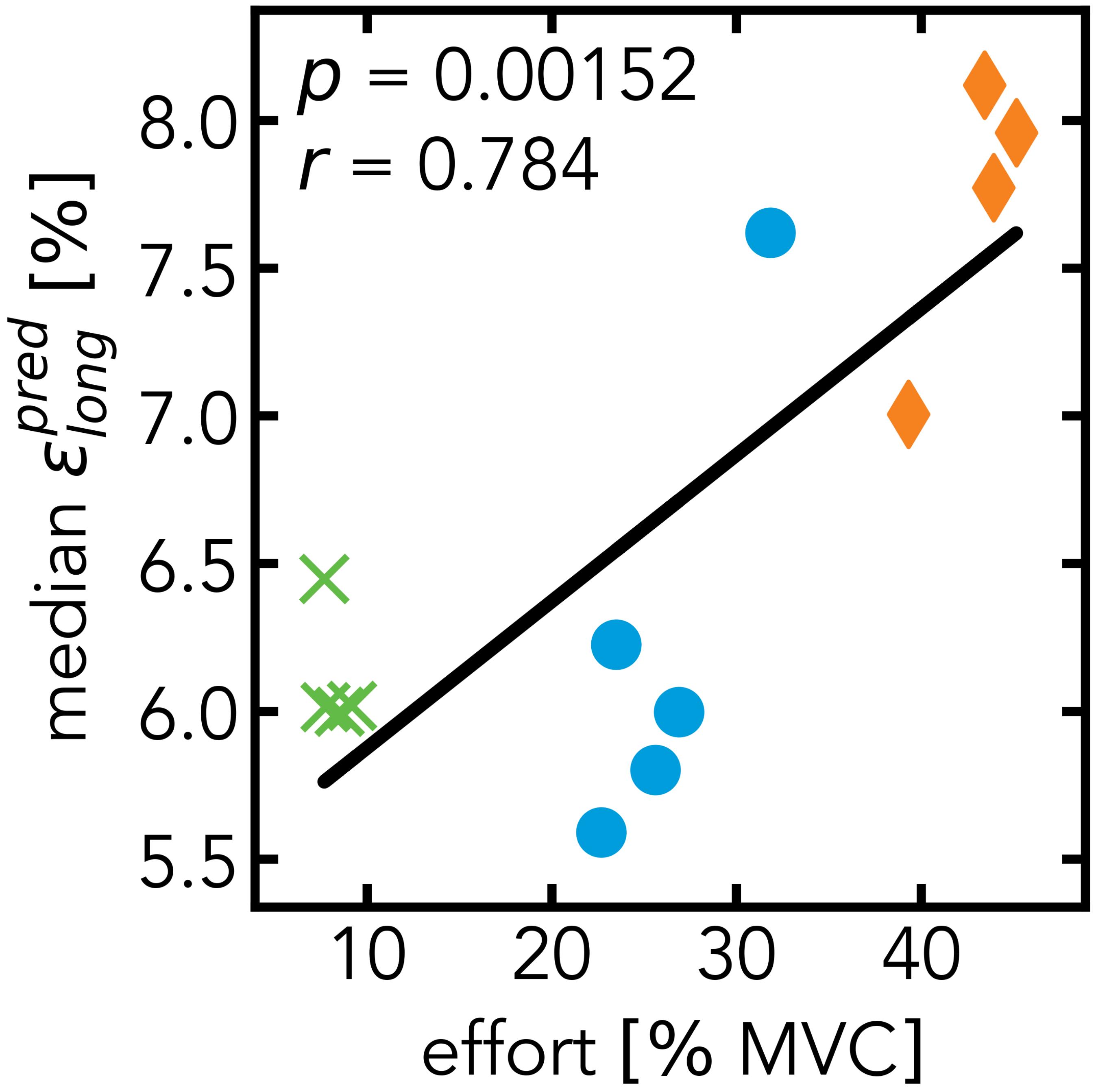

Additionally, StrainNet revealed strong correlations between tendon strains and applied forces in vivo, highlighting the potential for StrainNet to be a valuable tool in the assessment of rehabilitation or disease progression.

In the following video, you'll see StrainNet's predicted strain distribution for three levels of muscle contraction: 10%, 30%, and 50% of maximum voluntary contraction (MVC).

While our study demonstrates the effectiveness and potential of StrainNet, there are still limitations and areas for future work. For example, the approach may not be well-suited for certain types of tissue or deformation, and there is still room for improvement in terms of accuracy and robustness. Future work will focus on expanding the applicability of the approach and improving its accuracy and generalizability.

@article{huff2024strainnet,
  title={Deep learning enables accurate soft tissue tendon deformation estimation in vivo via ultrasound imaging},
  author={Huff, Reece D and Houghton, Frederick and Earl, Conner C and Ghajar-Rahimi, Elnaz and Dogra, Ishan and Yu, Denny and Harris-Adamson, Carisa and Goergen, Craig J and O’Connell, Grace D},
  journal={Scientific Reports},
  volume={14},
  number={1},
  pages={18401},
  year={2024},
  publisher={Nature Publishing Group UK London}
}

This study was supported by the National Institutes of Health (NIH R21 AR075127-02), the National Science Foundation (NSF GRFP), and the National Institute for Occupational Safety and Health (NIOSH) / Centers for Disease Control and Prevention (CDC) (Training Grant T42OH008429).